Ying Ma

Assistant Professor

Department of Electrical and Computer Engineering

University of Central Florida

Orlando, FL, 32816

Email: firstname dot lastname at ucf dot edu

Office: HEC 317A

I joined the Department of Electrical and Computer Engineering at the University of Central Florida in Orlando as an assistant professor in August 2021. I received my Ph.D. degree in 2021 from the University of Florida under the supervision of Prof. Jose Principe. During my Ph.D., I worked as a summer research intern with Apple Siri Understanding in 2019 and at Google in 2020 and 2021. Previously, I was a visiting student at the University of Southampton in 2015, where I was advised by Prof. Lajos Hanzo and Prof. Sheng Chen.

My primary research interest is deep learning (in particular, reinforcement learning and multimodal machine learning) with applications to natural language processing (NLP) and computer vision (CV). My research focuses on developing new ML architectures to bridge the gap between theoretical developments and real-world applications.

We are hiring students!

I am looking for strong and highly motivated Ph.D. students who are interested in machine learning and deep learning. If you are interested in working with me, please submit your application to the ECE Department at UCF and send me your CV and sample publications, if any.

For students at the University of Central Florida, multiple paid part-time and volunteer research assistant positions are available. If you are interested, please send me your CV.

Our primary research interest is deep learning (in particular, reinforcement learning and multimodal machine learning) with applications to natural language processing (NLP) and computer vision (CV).

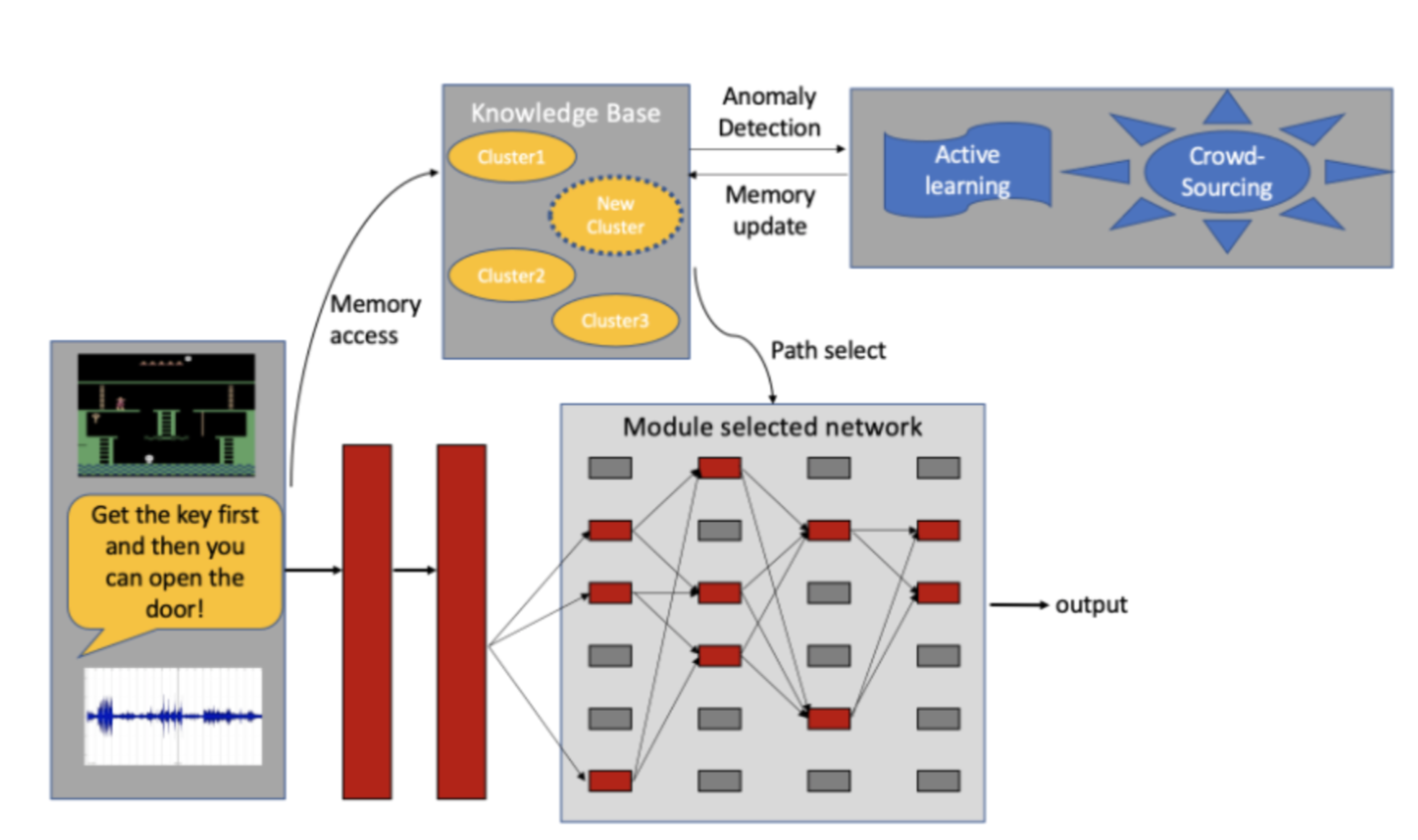

This system employs a self-organizing object detector as a front end to deconstruct the environment. First, the environment is decomposed into objects; then, our IMRL (internal memory augmented RL) network is used to learn the objects’ affordances (i.e., the sequences of actions that manipulate these objects). The learned objects, along with their properties, are stored in the external memory and reused when the same objects are detected in an unseen environment. This autonomous vision system is one step closer to lifelong learning because it learns continuously during execution and becomes increasingly adept at performing tasks without forgetting its previous experiences.
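To make the store-and-recall idea concrete, the sketch below shows one hypothetical way an external object memory could work: each detected object is keyed by a feature vector, its learned affordance (an action sequence) is stored alongside it, and a new detection reuses the affordance of the most similar stored object. The class name `ObjectMemory`, the cosine-similarity matching, and the `threshold` parameter are illustrative assumptions, not the IMRL implementation.

```python
import math
from typing import List, Optional, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class ObjectMemory:
    """Hypothetical external memory mapping object features to learned affordances."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold  # minimum similarity to treat two detections as the same object
        self.entries: List[Tuple[List[float], List[str]]] = []

    def store(self, features: Sequence[float], affordance: Sequence[str]) -> None:
        """Record an object's features together with the action sequence that manipulates it."""
        self.entries.append((list(features), list(affordance)))

    def recall(self, features: Sequence[float]) -> Optional[List[str]]:
        """Return the affordance of the most similar stored object, or None if nothing is close enough."""
        best, best_sim = None, self.threshold
        for key, affordance in self.entries:
            sim = cosine(key, features)
            if sim >= best_sim:
                best, best_sim = affordance, sim
        return best


memory = ObjectMemory(threshold=0.9)
memory.store([1.0, 0.0, 0.0], ["approach", "push_left"])
memory.store([0.0, 1.0, 0.0], ["jump"])
print(memory.recall([0.95, 0.05, 0.0]))  # a slightly different view of the first object
print(memory.recall([0.0, 0.0, 1.0]))    # an object never seen before
```

In this sketch an unfamiliar object returns `None`, which is where an agent would fall back to learning a new affordance and then call `store`.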

Y. Ma, J. Brooks, H. Li, J. Principe, “Procedural Memory Augmented Deep Reinforcement Learning,” accepted by IEEE Trans. Artificial Intelligence (TAI).

H. Li, Y. Ma, J. C. Principe, “Cognitive Architecture for Videogames,” IEEE IJCNN 2020.

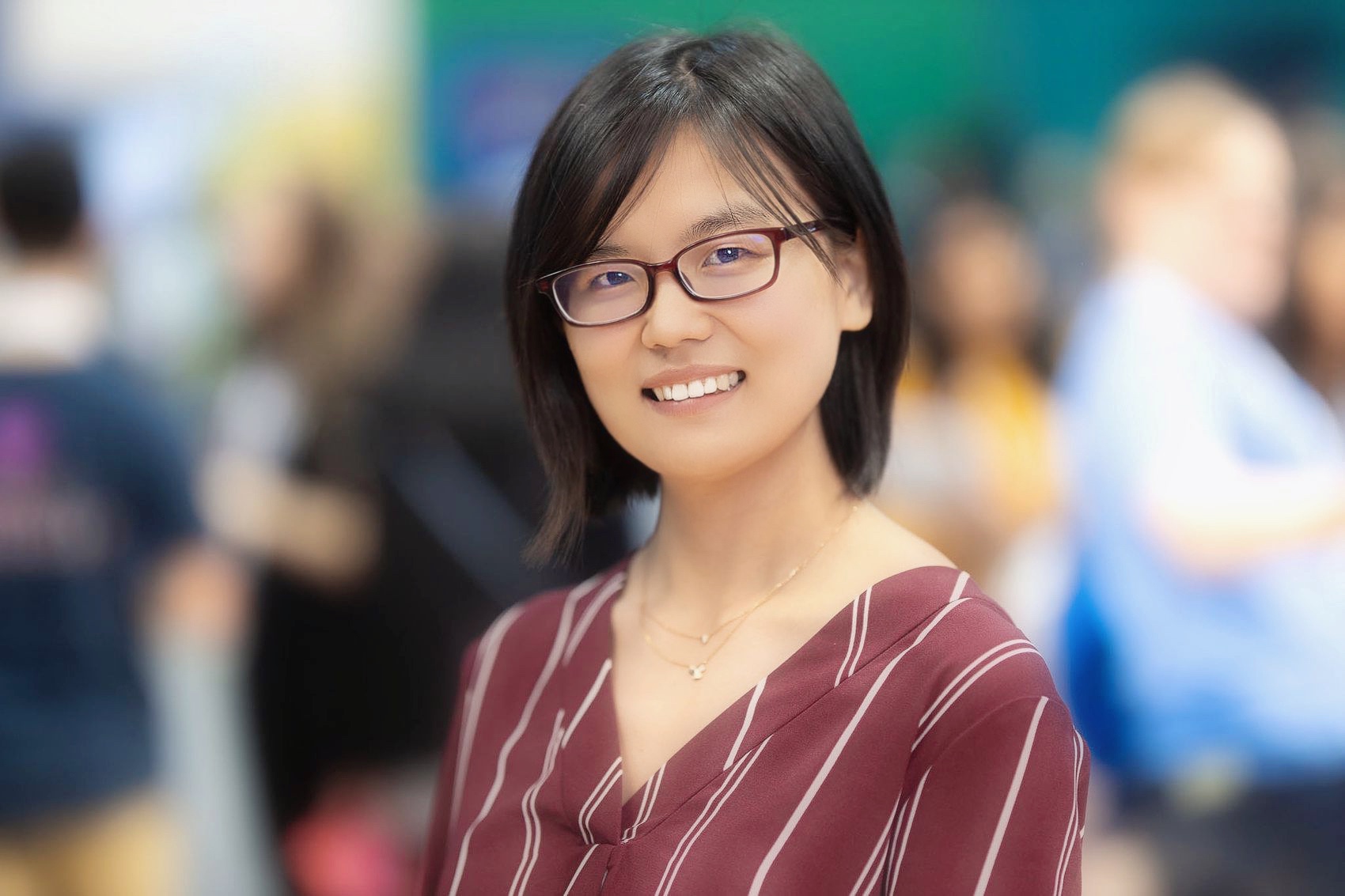

Many dynamic neural networks with new memory structures have recently emerged to address problems that require memory. We proposed a systematic approach [Tax] to analyze and compare the underlying memory structures of popular recurrent neural networks, including the vanilla RNN, LSTM, neural stack [NS], neural Turing machine, and neural attention models. Our analysis provides a window into the “black box” of recurrent neural networks from a memory-usage perspective. Accordingly, we developed a taxonomy for these networks and their variants and mathematically revealed their intrinsic inclusion relationships. To help users select an appropriate architecture, we also connected the relative expressive power of these models to the memory requirements of different tasks, such as sentiment analysis and question answering.

[Tax] Y. Ma, J. Principe, “A Taxonomy for Neural Memory Networks,” IEEE Trans. Neural Netw. Learn. Syst. (TNNLS), vol. 31, no. 16, pp. 1780-1793, 2020.

[NS] Y. Ma, J. Principe, “Comparison of Static Neural Network with External Memory and RNNs for Deterministic Context Free Language Learning,” IEEE IJCNN 2018.
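As an illustration of one memory structure covered by this comparison, the sketch below implements the continuous (differentiable) stack update described by Grefenstette et al. (2015): every stored element carries a strength in [0, 1], push and pop are real-valued, and a read returns a strength-weighted blend of the topmost elements. This is a plain-Python sketch of that published formulation for illustration only; it is not necessarily the exact neural stack used in [Tax] or [NS], and the class name `NeuralStack` is hypothetical.

```python
from typing import List


class NeuralStack:
    """Continuous stack in the style of Grefenstette et al. (2015).

    Each element has a value vector and a strength; push (d) and pop (u) are
    real numbers, so reads are weighted blends of the top elements.
    """

    def __init__(self) -> None:
        self.values: List[List[float]] = []
        self.strengths: List[float] = []

    def step(self, value: List[float], push: float, pop: float) -> List[float]:
        """Pop with strength `pop`, push `value` with strength `push`, then read the top."""
        # Pop: remove `pop` units of strength, starting from the top of the old stack.
        new_strengths = []
        for i in range(len(self.strengths)):
            above = sum(self.strengths[i + 1:])
            new_strengths.append(max(0.0, self.strengths[i] - max(0.0, pop - above)))
        # Push: append the new value with strength `push`.
        self.strengths = new_strengths + [push]
        self.values.append(list(value))
        # Read: accumulate up to one unit of total strength, starting from the top.
        read = [0.0] * len(value)
        for i in range(len(self.strengths)):
            above = sum(self.strengths[i + 1:])
            weight = min(self.strengths[i], max(0.0, 1.0 - above))
            read = [r + weight * v for r, v in zip(read, self.values[i])]
        return read


stack = NeuralStack()
stack.step([1.0, 0.0], push=1.0, pop=0.0)         # push a
stack.step([0.0, 1.0], push=1.0, pop=0.0)         # push b; the read now returns b
print(stack.step([0.0, 0.0], push=0.0, pop=1.0))  # pop b; the read returns a again
```

With push and pop strengths of exactly 0 or 1 this behaves like a discrete stack; fractional strengths give the smooth, gradient-friendly behavior that lets such a network be trained end to end.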

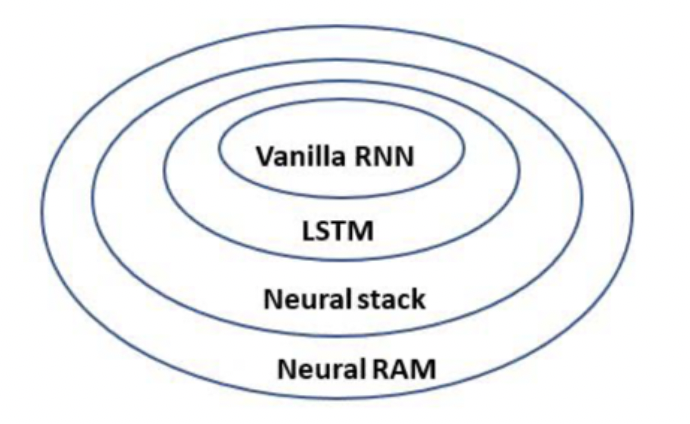

We augmented current deep RL networks by introducing an extra gate mechanism. In addition, we introduced an internal reward mechanism to promote longer macro actions. Using these two techniques, our agent interacts less with the environment and explores in a more structured manner, thereby obtaining a higher cumulative reward. Both techniques can be applied to deep RL architectures in general. In our experiments, we incorporated them into an Asynchronous Advantage Actor-Critic (A3C) network and tested it on Atari games. The results show improvements in both computational efficiency and performance.
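The sketch below illustrates one plausible form of an internal reward that favors longer macro actions: the agent receives a small bonus, on top of the environment reward, each time it repeats its previous action, and consecutive repeats are grouped into a single macro action. The bonus rule and the names `shaped_rewards`, `to_macro_actions`, and `repeat_bonus` are hypothetical assumptions for illustration; the actual reward and gate mechanism are described in the paper.

```python
from typing import List, Sequence, Tuple


def shaped_rewards(actions: Sequence[int], env_rewards: Sequence[float],
                   repeat_bonus: float = 0.01) -> List[float]:
    """Add a hypothetical internal bonus whenever the agent repeats its previous action.

    Repeating an action extends the current macro action, so longer macro actions
    accumulate more internal reward on top of the extrinsic (environment) reward.
    """
    if len(actions) != len(env_rewards):
        raise ValueError("actions and env_rewards must have the same length")
    shaped = []
    for t, (a, r) in enumerate(zip(actions, env_rewards)):
        extends_macro = t > 0 and a == actions[t - 1]
        shaped.append(r + (repeat_bonus if extends_macro else 0.0))
    return shaped


def to_macro_actions(actions: Sequence[int]) -> List[Tuple[int, int]]:
    """Group consecutive repeated actions into (action, length) macro actions."""
    macros: List[Tuple[int, int]] = []
    for a in actions:
        if macros and macros[-1][0] == a:
            macros[-1] = (a, macros[-1][1] + 1)
        else:
            macros.append((a, 1))
    return macros


actions = [0, 0, 0, 2]
print(shaped_rewards(actions, [0.0, 0.0, 1.0, 0.0], repeat_bonus=0.1))
print(to_macro_actions(actions))  # four primitive steps become two macro decisions
```

The grouping shows why longer macro actions mean fewer decisions: the policy only has to choose when a macro action starts, not at every primitive step.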

Jointly modeling multiple modalities exploits more comprehensive information and can therefore achieve better performance. This project aims to learn informative but concise representations from unlimited multimodal data by utilizing an external memory. We particularly focus on applications such as autonomous driving (images, LiDAR point clouds, and HD maps), recommendation systems (text and images), and chatbots (images and audio).

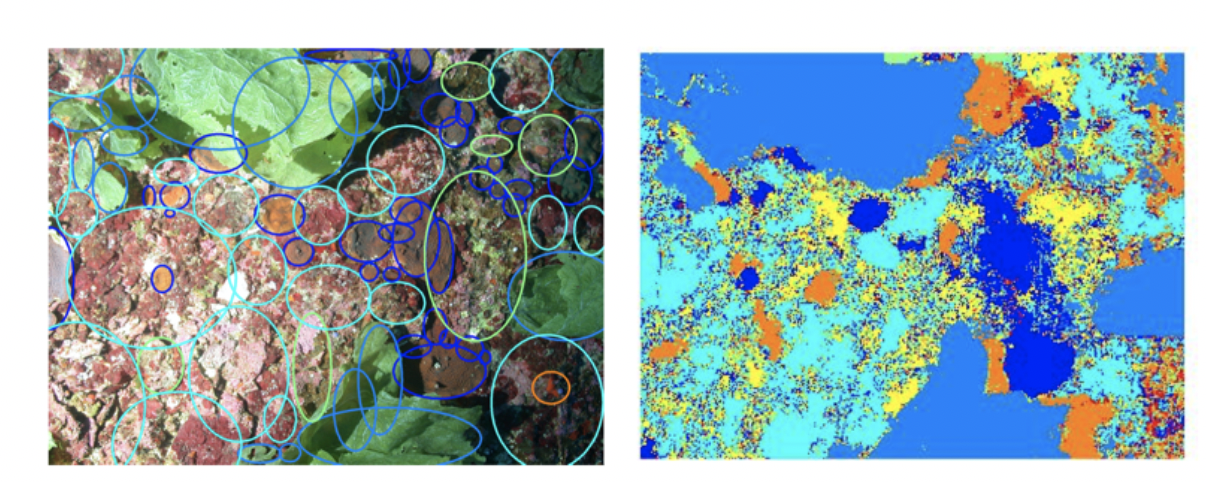

To recognize biota and coral distributions at Pulley Ridge HAPC, a mesophotic reef in the Gulf of Mexico, we developed convolutional neural network (CNN)-based image segmentation and classification methods that recognize the abundance and health state of corals from sparsely labeled images [coral1][coral2]. As an extension of this work, we applied our sequential neural network to learn the syntactic patterns of plant development and automatically identify plant species [coral3]. This is an important first step not only for identifying plants but also for automatically generating realistic plant models from observations.

[coral1] Y. Xi, Y. Ma, S. Farrington, J. Reed, B. Ouyang, J. Principe, “Fast Segmentation for Large and Sparsely Labeled Coral Images,” IEEE IJCNN 2019.

[coral2] Y. Ma, B. Ouyang, S. Farrington, S. Yu, J. Reed, J. Principe, “Joint Segmentation and Classification with Application to Cluttered Coral Images,” IEEE GlobalSIP 2019.

[coral3] K. Li, Y. Ma, J. Principe, “Automatic Plant Identification Using Spatio-Temporal Evolution Model (STEM) Automata,” IEEE MLSP 2017.
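A common way to train a segmentation network from sparsely labeled images is to compute the loss only at annotated pixels and ignore the rest. The sketch below shows that idea as a masked cross-entropy over per-pixel class probabilities, with `None` marking an unlabeled pixel. It is an illustrative assumption, not necessarily the loss used in [coral1] or [coral2], and the function name `sparse_label_loss` is hypothetical.

```python
import math
from typing import Optional, Sequence


def sparse_label_loss(probs: Sequence[Sequence[float]],
                      labels: Sequence[Optional[int]]) -> float:
    """Mean cross-entropy over labeled pixels only.

    probs[k] holds the predicted class probabilities for pixel k;
    labels[k] is its class index, or None if the pixel was never annotated.
    """
    total, count = 0.0, 0
    for p, y in zip(probs, labels):
        if y is None:
            continue  # unlabeled pixels contribute nothing to the loss
        total += -math.log(max(p[y], 1e-12))  # clamp to avoid log(0)
        count += 1
    if count == 0:
        raise ValueError("no labeled pixels in this batch")
    return total / count


# Three pixels with two classes (e.g., healthy vs. bleached); only two are labeled.
probs = [[0.5, 0.5], [0.9, 0.1], [0.2, 0.8]]
labels = [None, 0, 1]
print(sparse_label_loss(probs, labels))
```

Averaging over labeled pixels only keeps the loss scale independent of how sparse the annotations are in a given image.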

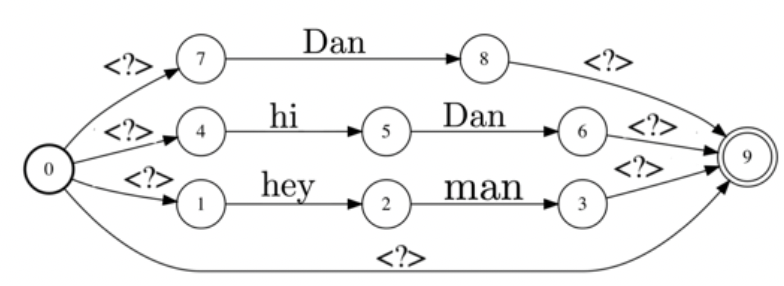

Graph neural networks are an extension of sequential neural networks to non-Euclidean data structures. In this work, we address false trigger mitigation and intent classification by analyzing automatic speech recognition (ASR) lattices with graph neural networks (GNNs), namely graph convolutional networks and graph attention networks. This work was used to improve the performance of Apple’s Siri application.

P. Dighe, S. Adya, N. Li, S. Vishnubhotla, D. Naik, A. Sagar, Y. Ma, S. Pulman, J. Williams, “Lattice-based Improvements for Voice Triggering Using Graph Neural Networks,” IEEE ICASSP 2020.
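To show how a GNN consumes a lattice, the sketch below applies one layer of the standard graph convolution rule of Kipf and Welling, ReLU(D^-1/2 (A + I) D^-1/2 X W), to a tiny three-node lattice and then mean-pools node features into a single graph vector that a classifier could score. Treating the lattice's directed arcs as undirected, the toy sizes, and the names `gcn_layer` and `readout` are assumptions for illustration; this is not the model from the ICASSP paper.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def gcn_layer(adj: Matrix, feats: Matrix, weight: Matrix) -> Matrix:
    """One graph-convolution layer: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    n = len(adj)
    # Symmetrize the lattice arcs (an assumption) and add self-loops so each
    # node keeps its own features.
    a_hat = [[1.0 if i == j else float(adj[i][j] or adj[j][i]) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    out = matmul(matmul(norm, feats), weight)
    return [[max(0.0, x) for x in row] for row in out]


def readout(node_feats: Matrix) -> List[float]:
    """Mean-pool node features into one graph-level vector."""
    n = len(node_feats)
    return [sum(col) / n for col in zip(*node_feats)]


# Toy lattice: word arcs 0 -> 1 -> 2, one scalar feature per node.
adj = [[0, 1, 0], [0, 0, 1], [0, 0, 0]]
hidden = gcn_layer(adj, feats=[[1.0], [0.0], [0.0]], weight=[[1.0]])
print(hidden)
print(readout(hidden))
```

A graph attention layer would replace the fixed normalization weights in `norm` with learned, input-dependent attention coefficients over each node's neighbors.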

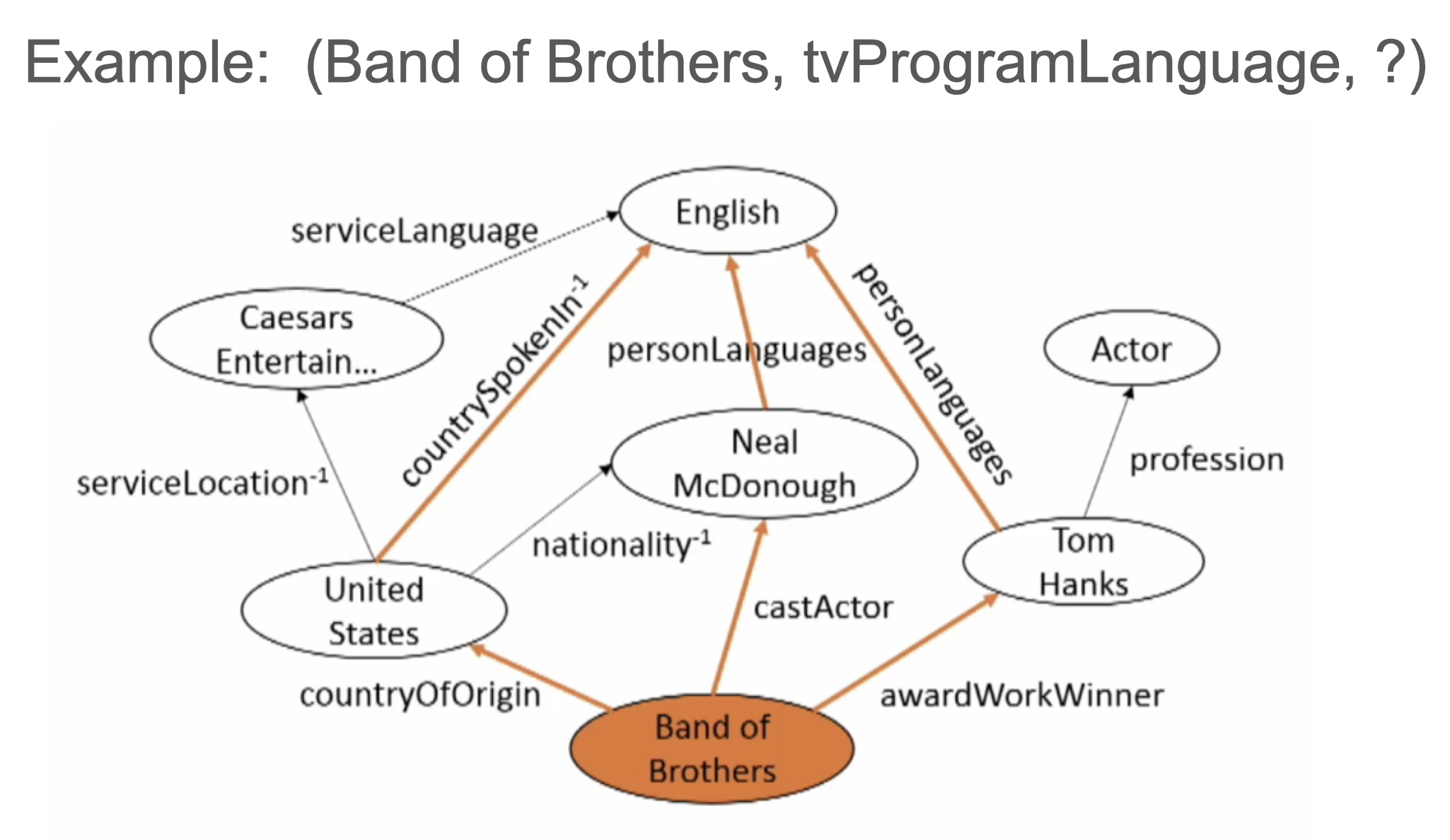

We applied a deep RL network to navigate a knowledge graph conditioned on the input question by defining a Markov decision process on the knowledge graph. An agent travels from a starting vertex to an ending vertex by choosing edges, thereby finding predictive paths and answers. Unlike other RL environments, a knowledge graph has a large action space and missing edges. To handle these challenges, we combined our RL network with traditional embedding-based methods for question answering (QA) on knowledge graphs (KGs) to obtain better performance.
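The sketch below spells out the Markov decision process in this formulation on a toy graph: a state is the question plus the vertex the agent currently occupies, the available actions are that vertex's outgoing edges (plus a self-loop so the agent can stay on an answer), and a reward of 1 is given only if the agent ends the episode on the answer vertex. The graph contents, the fixed episode length, the `NO_OP` self-loop, and the names `KGEnv` and `State` are hypothetical choices for illustration; the embedding-based component is not shown.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Edge = Tuple[str, str]  # (relation, target entity)

# Hypothetical toy knowledge graph: entity -> outgoing (relation, neighbor) edges.
KG: Dict[str, List[Edge]] = {
    "Orlando": [("located_in", "Florida")],
    "Florida": [("country", "USA"), ("capital", "Tallahassee")],
    "USA": [],
    "Tallahassee": [],
}


@dataclass(frozen=True)
class State:
    question: str   # the query the policy is conditioned on
    entity: str     # vertex the agent currently occupies
    steps: int = 0


class KGEnv:
    """Toy MDP on a knowledge graph with a terminal 0/1 reward."""

    def __init__(self, kg: Dict[str, List[Edge]], answer: str, max_steps: int = 3) -> None:
        self.kg = kg
        self.answer = answer
        self.max_steps = max_steps

    def actions(self, state: State) -> List[Edge]:
        # Outgoing edges plus a self-loop so the agent can stay once it reaches an answer.
        return self.kg.get(state.entity, []) + [("NO_OP", state.entity)]

    def step(self, state: State, action: Edge) -> Tuple[State, float, bool]:
        if action not in self.actions(state):
            raise ValueError(f"{action} is not an outgoing edge of {state.entity}")
        _relation, target = action
        nxt = State(state.question, target, state.steps + 1)
        done = nxt.steps >= self.max_steps
        reward = 1.0 if done and target == self.answer else 0.0
        return nxt, reward, done


env = KGEnv(KG, answer="Tallahassee", max_steps=3)
s = State("What is the capital of the state containing Orlando?", "Orlando")
for edge in [("located_in", "Florida"), ("capital", "Tallahassee"), ("NO_OP", "Tallahassee")]:
    s, r, done = env.step(s, edge)
print(s.entity, r, done)
```

In a real knowledge graph, `actions` can return thousands of edges per vertex, which is the large-action-space issue mentioned above, and a missing edge simply never appears in that list.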

Master's and Undergraduate Students

Research Interest: Reinforcement Learning

Research Interest: Question Answering on Knowledge Graph

Research Interest: Reinforcement Learning

Research Interest: Reinforcement Learning

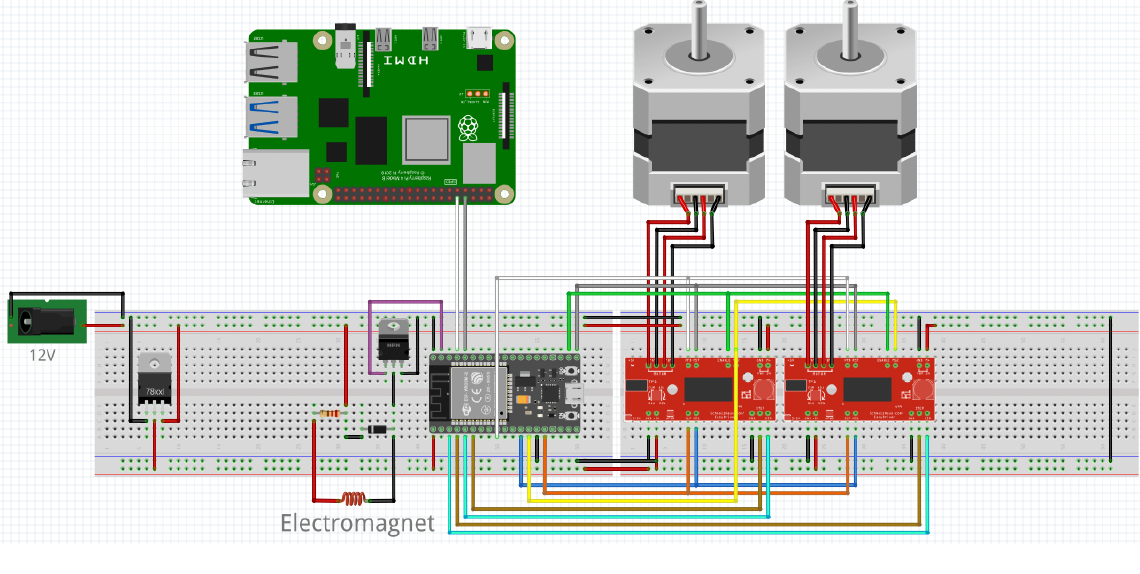

Circuit Schematic

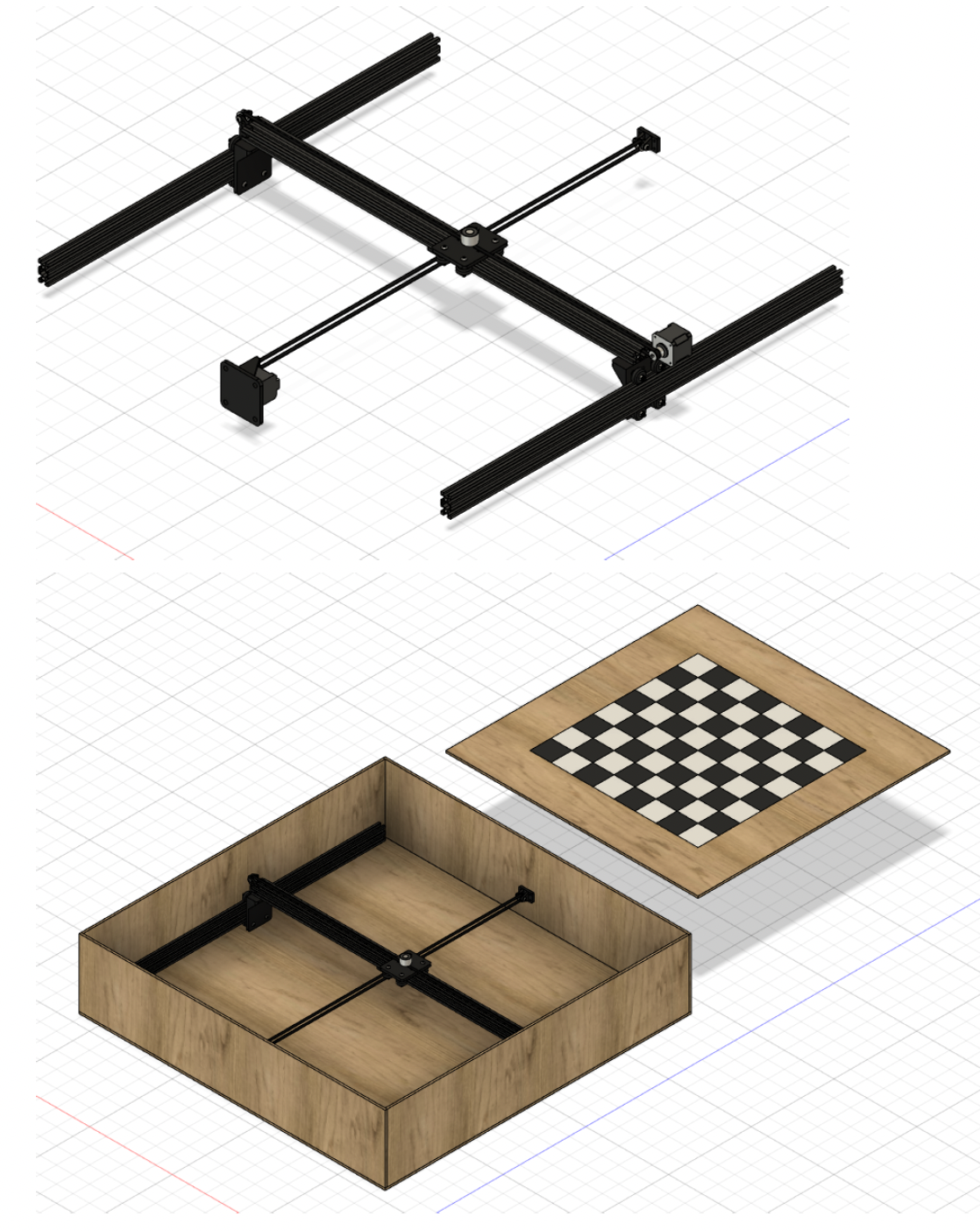

Chessboard/Firmware
